The announcement of the Amazon Fire Phone is one of the most interesting pieces of technology news I’ve come across in recent times. While the jury is still out on whether it will be a commercial success (UPDATE: recent estimates suggest sales could be as low as 35,000 units), the features that the phone comes with got me thinking about the technical advances that have made it possible.

The caveat here is that much of what follows is speculation, but I do have a background in research projects on speech recognition and on computer-vision-based user experience research. I’m going to dive into why the Fire Phone’s features are an exciting advance in computing, what they mean for the future of phones in terms of end-user experience, and a killer feature I think many other pundits are missing.

Fire Phone’s 3D User Interface

I did my final year research project on using eye tracking on mobile user interfaces as a method of user research. The problem with many current methods of eye tracking is that they require specialised hardware. Typically, the approach is to use a camera that can “see” in infrared, illuminate the user’s eye with infrared light, and use the glint (the reflection of the light source off the cornea) to track the position of the eyeball relative to the infrared light sources.

This works fabulously when the system is desktop-based. Chances are, the user is going to be within a certain range of distance from the screen, and facing it at a right angle. Since the infrared light sources are typically attached to the corners of the screen (or at some other known, fixed position), it’s relatively trivial to figure out the angles at which a glint is being picked up. Indeed, if you dig into the research on this particular computer vision challenge, you’ll mostly find variations on how best to use cameras in conjunction with infrared.
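The glint-based approach is usually called pupil centre corneal reflection (PCCR). Here’s a minimal sketch of its core idea, assuming the pupil and glint centres have already been located in the camera image: the vector from glint to pupil is mapped to screen coordinates by a calibration fitted while the user looks at known targets. The per-axis linear fit and all the function names are my own simplifications for illustration; real systems typically fit higher-order polynomials or a full 3D eye model.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def pupil_glint_vector(pupil: Point, glint: Point) -> Point:
    """Vector from the corneal glint to the pupil centre, in image pixels."""
    return (pupil[0] - glint[0], pupil[1] - glint[1])


def fit_line(xs: List[float], ys: List[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit of ys = slope * xs + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def calibrate(samples):
    """Fit one line per screen axis.

    samples: list of ((pupil, glint), (screen_x, screen_y)) pairs, recorded
    while the user looks at known on-screen calibration targets.
    """
    vectors = [pupil_glint_vector(pupil, glint) for (pupil, glint), _ in samples]
    targets = [target for _, target in samples]
    x_fit = fit_line([v[0] for v in vectors], [t[0] for t in targets])
    y_fit = fit_line([v[1] for v in vectors], [t[1] for t in targets])
    return x_fit, y_fit


def estimate_gaze(calibration, pupil: Point, glint: Point) -> Point:
    """Map a new pupil/glint observation to an estimated screen position."""
    (slope_x, icpt_x), (slope_y, icpt_y) = calibration
    dx, dy = pupil_glint_vector(pupil, glint)
    return (slope_x * dx + icpt_x, slope_y * dy + icpt_y)


# Synthetic calibration data where screen position = 100 + 20 * vector on both axes.
samples = [(((10 + d, 10 + d), (10, 10)), (100 + 20 * d, 100 + 20 * d)) for d in (-2, 0, 2)]
calibration = calibrate(samples)
print(estimate_gaze(calibration, (11, 9), (10, 10)))  # (120.0, 80.0)
```

On a desktop, the fixed geometry means this calibration stays valid; on a phone, every change in grip or head position shifts the mapping, which is exactly the drawback discussed next.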

The drawback to this approach is that the complexity increases vastly on mobile platforms. To figure out the angle at which a glint is being received, it’s necessary to work out the orientation of the phone from its motion sensors: the gyroscope (how quickly the phone is rotating) and the accelerometer (its acceleration, including gravity, which reveals which way is down). In addition, the user might be facing the phone at an angle rather than square-on, which adds another level of complexity in estimating pose. (This is needed in order to estimate visual angles.)
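Because the phone and the face both move, the distance between them keeps changing, and with it the visual angle that any on-screen target subtends. The standard formula for an object of size s viewed from distance d is θ = 2·atan(s / 2d). A minimal sketch; the 30 cm phone viewing distance is an illustrative assumption, not a measured figure.

```python
import math


def visual_angle_deg(size: float, distance: float) -> float:
    """Visual angle, in degrees, subtended by an object of `size` viewed from `distance`.

    Both arguments must be in the same unit (e.g. centimetres).
    """
    return math.degrees(2 * math.atan(size / (2 * distance)))


# Rule of thumb: 1 cm viewed from about 57 cm subtends roughly one degree.
print(round(visual_angle_deg(1.0, 57.0), 3))
# The same 1 cm target at a hypothetical phone distance of 30 cm subtends nearly twice that.
print(round(visual_angle_deg(1.0, 30.0), 3))
```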

My research project combined techniques similar to those used by Opengazer, a desktop-based eye tracking application, with pose estimation on mobile devices to track eye gaze. Actually, before the Amazon Fire Phone there was another phone that touted “eye tracking” (according to the NYT): the Samsung Galaxy S IV.

I don’t actually have a Samsung Galaxy to play with, and the patent mentioned in the New York Times article didn’t show any valid results, so I’m basing my guesses on demo videos. Using current computer vision software, given the proper lighting conditions, it’s easy to figure out whether the “pose” of a user’s head has changed: if the eye appears oblong rather than as a big, clean circle, that suggests the user has tilted their head up or down. (The “tilt device” option for Samsung’s Eye Scroll, on the other hand, isn’t eye tracking at all, as it just uses the accelerometer / gyroscope to detect that the device is being tilted.)
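The “oblong eye” cue can be made quantitative. Under a simple orthographic approximation, a circle (such as the iris) tilted by an angle θ away from the camera projects to an ellipse whose minor-to-major axis ratio is cos θ. A minimal sketch, assuming the ellipse axes have already been measured in the image; note that the ratio alone can’t tell up from down, which is one reason this cue only says “the pose has changed”.

```python
import math


def tilt_from_ellipse(major_axis: float, minor_axis: float) -> float:
    """Estimate how far (in degrees) a circular feature is tilted away from facing the camera.

    Assumes orthographic projection, where minor / major == cos(tilt).
    The sign of the tilt (up vs down) cannot be recovered from the ratio alone.
    """
    if major_axis <= 0 or minor_axis < 0 or minor_axis > major_axis:
        raise ValueError("expected 0 <= minor_axis <= major_axis and major_axis > 0")
    return math.degrees(math.acos(minor_axis / major_axis))


print(round(tilt_from_ellipse(40.0, 40.0), 1))  # 0.0: facing the camera head-on
print(round(tilt_from_ellipse(40.0, 20.0), 1))  # 60.0
```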

What I don’t think the Samsung Galaxy S IV can do with any accuracy is pinpoint where on the screen a user is looking, beyond detecting that “the face has changed from a right angle to something else”.

What makes the Fire Phone’s “3D capabilities” impressive?

In the demo video of Jeff Bezos showing off the Fire Phone’s 3D capabilities, you can see that it goes beyond what the Galaxy S IV can do: to follow a user’s gaze and tilt the perspective to match, the eye tracking has to be incredibly accurate. Specifically, instead of merely basing motion on how the device is tilted or how the user moves their head away from a right angle, it needs to combine device tilt / pose, head tilt / pose, and computer vision pattern recognition to figure out the visual angle from which the user is looking at an object.
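One way to picture the effect in the demo is motion parallax: UI layers drawn as if they sit at different depths behind the glass shift by different amounts as the viewer’s head moves. A minimal sketch, assuming the head position relative to the screen centre has already been estimated; the layer depths and head position are hypothetical, the result is in physical units (converting to pixels would need the screen’s pixel density), and this is not a description of Amazon’s implementation.

```python
def parallax_shift(layer_depth: float, head_x: float, head_z: float) -> float:
    """On-screen horizontal shift for a layer drawn as if it sits `layer_depth` behind the glass.

    head_x: sideways offset of the viewer's head from the screen centre.
    head_z: distance of the viewer's head from the screen plane.
    All three values share one unit (e.g. centimetres). By similar triangles,
    the line of sight from the head to a point directly behind the screen centre
    crosses the screen at head_x * depth / (head_z + depth).
    """
    if head_z <= 0 or layer_depth < 0:
        raise ValueError("head must be in front of the screen and depth non-negative")
    return head_x * layer_depth / (head_z + layer_depth)


# Hypothetical: head 10 cm to the right, 30 cm from the screen; layers at 0, 1 and 3 cm depth.
shifts = [round(parallax_shift(d, 10.0, 30.0), 3) for d in (0.0, 1.0, 3.0)]
print(shifts)  # [0.0, 0.323, 0.909]: deeper layers move further, towards the head's side
```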

Here’s where Amazon has another trick up its sleeve. Remember how I mentioned that glints from infrared light sources can be used to track eye position? It turns out that the Fire Phone uses precisely that setup: it has four front cameras, each with its own infrared light source, to accurately estimate pose along all three axes. (In terms of previous research, most desktop-based eye tracking systems that are considered accurate also use at least three fixed infrared light sources.)
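With more than one front camera, the distance to the user’s face can be triangulated. The textbook case is a rectified stereo pair: a feature seen at horizontal pixel positions that differ by a disparity d, in cameras a baseline B apart with focal length f (in pixels), lies at depth Z = f·B / d; back-projecting through the pinhole model then gives the other two axes. A minimal sketch; the focal length, baseline and pixel coordinates are hypothetical, and I don’t know how the Fire Phone actually combines its four cameras.

```python
def stereo_depth(focal_px: float, baseline: float, x_left: float, x_right: float) -> float:
    """Depth of a feature seen by a rectified stereo pair (same unit as `baseline`)."""
    disparity = x_left - x_right
    if disparity <= 0:
        raise ValueError("feature must have positive disparity")
    return focal_px * baseline / disparity


def back_project(focal_px: float, cx: float, cy: float, u: float, v: float, depth: float):
    """Pinhole back-projection of pixel (u, v) at a known depth into camera coordinates."""
    return ((u - cx) * depth / focal_px, (v - cy) * depth / focal_px, depth)


# Hypothetical: f = 500 px, cameras 6 cm apart, an eye seen at x = 340 (left) and x = 280 (right).
z = stereo_depth(500.0, 0.06, 340.0, 280.0)
print(round(z, 3))  # 0.5 (metres)
print(back_project(500.0, 320.0, 240.0, 340.0, 240.0, z))  # (0.02, 0.0, 0.5)
```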

So to recap, here’s my best guess on how Amazon is doing its 3D shebang:

- Four individual cameras, each with its own infrared light source. Four individual image streams that need to be combined to form a 3D perspective…

- …combined with how the device is rotating in the real world, measured by its gyroscope…

- …and which way is down (plus any sudden movement), measured by its accelerometer
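Fusing the two motion sensors is a well-studied problem. One common, lightweight technique is a complementary filter: integrate the gyroscope’s rotation rate for smooth short-term tracking, and nudge the result towards the tilt implied by gravity in the accelerometer reading to cancel long-term drift. A minimal single-axis sketch that covers only the sensor half of the list above (not the cameras); the blending factor of 0.98 and the sample values are assumptions for illustration, not anything Amazon has published.

```python
import math


def accel_tilt_deg(ay: float, az: float) -> float:
    """Tilt about one axis implied by gravity, from two accelerometer components."""
    return math.degrees(math.atan2(ay, az))


def complementary_filter(angle_deg, gyro_rate_dps, ay, az, dt, alpha=0.98):
    """One update step: trust the integrated gyro short-term, the accelerometer long-term."""
    gyro_estimate = angle_deg + gyro_rate_dps * dt
    return alpha * gyro_estimate + (1 - alpha) * accel_tilt_deg(ay, az)


# Phone held still at a 30-degree tilt, but the filter starts at 0 degrees.
# The gyro reads no rotation, so the estimate converges towards the accelerometer's tilt.
ay, az = math.sin(math.radians(30)), math.cos(math.radians(30))
angle = 0.0
for _ in range(500):
    angle = complementary_filter(angle, 0.0, ay, az, dt=0.01)
print(round(angle, 1))  # 30.0
```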

Just dealing with one image stream on a mobile device is a computationally complex problem in its own right. As hardware becomes cheaper, more smartphones include higher-resolution front cameras (and better image sensors, so it isn’t just the resolution but also the image quality that improves). That gives better images to work with, but it also creates another problem: there’s a larger image to process on the device. This matters because, according to psychological research into how people perceive visual objects, a user’s gaze rests on a particular area for only a narrow window, on the order of a few hundred milliseconds.
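To put rough numbers on that processing budget, the sketch below counts the pixels captured during a single fixation. The resolution, frame rate, fixation length and number of streams are hypothetical round numbers chosen for illustration, not Fire Phone specifications.

```python
def pixels_per_fixation(width: int, height: int, fps: float, fixation_ms: float, streams: int = 1) -> float:
    """Pixels captured across `streams` cameras during one fixation."""
    frames = fps * fixation_ms / 1000
    return width * height * frames * streams


# Hypothetical numbers: 1280x720 front cameras at 30 fps, a 200 ms fixation.
print(f"per-frame budget: {1000 / 30:.1f} ms")  # per-frame budget: 33.3 ms
print(f"one stream:   {pixels_per_fixation(1280, 720, 30, 200):,.0f} pixels per fixation")  # 5,529,600
print(f"four streams: {pixels_per_fixation(1280, 720, 30, 200, streams=4):,.0f} pixels per fixation")  # 22,118,400
```

Every extra camera multiplies the work, while the time window set by human perception stays the same.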

Even on a desktop-class processor, doing all of this is a challenge, and a phone has far less processing headroom. (One article I’ve read compares JavaScript performance on ARM processors with desktop processors, but the lessons are equally valid for more computationally complex tasks such as computer vision.) What the Amazon Fire Phone is doing is combining images from four different cameras with its sensor data to build a picture of the world and change perspective…in real time. As someone who’s studied computer vision, I find this an incredibly exciting advance in the field!

My best guess is that they’ve cracked this by using binary segmentation instead of feature extraction. That was the approach I attempted when working on my project, but I could be wrong.
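To illustrate what binary segmentation means here: rather than extracting descriptive features, you threshold the infrared image so that only the very bright glint pixels (or, inverted, the very dark pupil pixels) survive, then take the centroid of what’s left. A minimal sketch on a tiny synthetic grayscale image; the threshold value is an assumption, and a real pipeline would add noise filtering and connected-component analysis.

```python
from typing import List, Optional, Tuple

Image = List[List[int]]  # rows of 8-bit grayscale values


def binarise(image: Image, threshold: int, bright: bool = True) -> List[List[bool]]:
    """Keep pixels above (bright=True) or below (bright=False) the threshold."""
    return [[(p > threshold) if bright else (p < threshold) for p in row] for row in image]


def centroid(mask: List[List[bool]]) -> Optional[Tuple[float, float]]:
    """(x, y) centroid of the True pixels, or None if the mask is empty."""
    xs, ys = [], []
    for y, row in enumerate(mask):
        for x, on in enumerate(row):
            if on:
                xs.append(x)
                ys.append(y)
    if not xs:
        return None
    return (sum(xs) / len(xs), sum(ys) / len(ys))


# Synthetic 5x5 IR image: dark background with a 2x2 bright glint at columns 2-3, rows 1-2.
img = [
    [10, 10, 10, 10, 10],
    [10, 10, 250, 250, 10],
    [10, 10, 250, 250, 10],
    [10, 10, 10, 10, 10],
    [10, 10, 10, 10, 10],
]
print(centroid(binarise(img, threshold=200)))  # (2.5, 1.5)
```

The appeal on a phone is cost: one comparison per pixel and a running sum, with no descriptor computation.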

Is a 3D user interface a good idea though?

Right now, based purely on the demo, the 3D interface seems like a gimmick that may go down well when potential customers are testing the product in a store. Amazon’s marketing seems to be banking on a “Wow, that’s really cool” reaction. Personally, I felt the visual aesthetics were less 21st century and more like noughties 3D screensavers on Windows desktops.

Every time a new user interface paradigm like the Fire Phone’s Dynamic Perspective or the Leap Motion controller comes along, I’m reminded of this quote from Douglas Adams’ The Hitchhiker’s Guide To The Galaxy:

For years radios had been operated by means of pressing buttons and turning dials; then as the technology became more sophisticated the controls were made touch-sensitive – you merely had to brush the panels with your fingers; now all you had to do was wave your hand in the general direction of the components and hope. It saved a lot of muscular expenditure of course, but meant that you had to sit infuriatingly still if you wanted to keep listening to the same programme.

My fear is that in an ever-increasing arms race to wow customers with new user interfaces, companies will go too far in incorporating gimmicks such as Amazon’s Dynamic Perspective or Samsung’s Eye Scroll. Do I really want my homescreen or what I’m reading to shift if I tilt my phone one way or the other, like the Fire Phone does? Do I really want the page to scroll based on the angle at which I’m looking at the device, like Samsung’s does? Another companion feature on the Galaxy S IV, called Eye Pause, pauses video playback if the user looks away. Anecdotally, I often “second screen” by browsing on a different device while watching a TV show or a film…and I wouldn’t want playback to pause merely because I flick my attention between devices.

Another example of the unintended consequences of new user interfaces is the Xbox One advert featuring Breaking Bad’s Aaron Paul. Since the Xbox One comes with speech recognition technology, playing the advert on TV inadvertently turned viewers’ Xboxes on. Whoops.

What’s missing in all of the above examples is context, much like what Douglas Adams’ quote illustrates. In the absence of physical, explicit controls, interfaces that rely on implicit signals such as gaze, tilt or voice can’t distinguish whether a user meant to change the state of a system or not. (Marco Arment talks through this “common sense” approach to tilt scrolling as used in Instapaper.)
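One simple way to inject a little “common sense” is to require an implicit signal to be both large enough and sustained long enough before acting on it: a dead zone plus a dwell time. A minimal sketch applied to an Eye Pause-style feature and to tilt scrolling; the class, function names, 2-second dwell and 10-degree dead zone are all hypothetical, and I’m not suggesting this is how Samsung or Instapaper actually implement it.

```python
class DwellGate:
    """Fire an action only after a condition has held continuously for `dwell_s` seconds."""

    def __init__(self, dwell_s: float):
        self.dwell_s = dwell_s
        self._since = None  # timestamp when the condition most recently became true

    def update(self, condition: bool, now: float) -> bool:
        if not condition:
            self._since = None  # any interruption resets the timer
            return False
        if self._since is None:
            self._since = now
        return now - self._since >= self.dwell_s


def should_scroll(tilt_deg: float, dead_zone_deg: float = 10.0) -> bool:
    """Ignore small, incidental tilts that fall inside the dead zone."""
    return abs(tilt_deg) > dead_zone_deg


# Quick glances at a second screen (under 2 s) should not pause the video.
gate = DwellGate(dwell_s=2.0)
looks_away = [(0.0, True), (1.0, True), (1.5, False), (2.0, True), (4.5, True)]
for t, away in looks_away:
    print(t, gate.update(away, t))  # only the sample at t=4.5 pauses playback
```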

One of the things that I learnt during my research project was that there’s a serious lack of usability studies of mobile devices in real-world environments. User research on how effective new user interfaces are, not just in general terms but also at the app level, needs to dig deeper to figure out what’s going on in the field.

In the short term, I don’t think sub-par interfaces such as the examples I mentioned above will become mainstream, because the user experience is spotty and much less reliable. Again, this is pure conjecture because, as I pointed out, there’s a lack of hard data on how users actually behave with such new technology. My worry is that if such technologies become mainstream (they won’t right now, because of patents) without solving the context problem, we’ll end up in a world where hand-gesture-sensitive radios are common purely because “it’s newer technology, hence, it’s better”.

(On a related note: How Minority Report Trapped Us In A World of Bad Interfaces)

Fire Phone’s Firefly feature: search anything, buy anything

Staying on the topic of computer vision, another one of the headline features for Amazon’s Fire Phone is a feature called Firefly – which allows users to point their camera at an object and have it ready to buy. Much of the analysis around Fire Phone that I’ve read focuses on the “whale strategy” of getting high-spending users to spend even more.

While I do agree with those articles, I wanted to bring another dimension into play by talking about the technology that makes this possible. Thus far, there has been a proliferation of “showrooming” thanks to barcode scanner apps that allow people to look up prices for items online…and so, the thinking goes, a feature like Firefly that reduces friction in the process will encourage that kind of behaviour further and push people to shop online: good for Amazon, because they get lock-in. Amazon went so far as to launch a standalone barcode scanning device called the Amazon Dash.

My hunch – from my experience of computer vision – is that the end-user experience using a barcode scanner versus the Firefly feature will be fundamentally different in terms of reliability. (Time will tell whether it’s better or worse.) Typically for a barcode scanner app:

- Users take a macro (close-up) shot of the barcode: This ensures that even in poor lighting conditions, there’s a high-quality picture of the object to be scanned. Pictures taken at an angle can be de-skewed (transformed back into a flat, head-on view) before processing, and this is computationally “easy”.

- Input formats are standardised: Whether it’s a vertical line barcode, a QR code or any of the myriad other formats, there’s a limited set of patterns that need to be recognised, which gives pattern recognition algorithms a higher probability of finding an accurate match.

Most importantly, thanks to standardisation in retail, if the barcode conforms to the Universal Product Code or International Article Number (the two major standards), any lookup can be accurately matched to a unique item (a “stock keeping unit” in retail terminology). Once the pattern has been identified, a text string is generated that can be quickly looked up in a standard relational database. This makes lookups accurate (was the correct item identified?) and reliable (how often is the correct item identified?).
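Two properties make barcode lookups so dependable. First, UPC-A and EAN-13 codes end in a check digit, so a misread is usually detected rather than silently matched to the wrong item. Second, the decoded digits are an exact key for a plain database query. A minimal sketch using Python’s built-in sqlite3 module; the table layout, SKU and product name are made up for illustration (the barcode itself is simply a code with a valid check digit).

```python
import sqlite3


def normalise(code: str) -> str:
    """Treat a 12-digit UPC-A code as EAN-13 by prefixing a zero."""
    return "0" + code if len(code) == 12 else code


def ean13_is_valid(code: str) -> bool:
    """Check the EAN-13 check digit (weights 1, 3, 1, 3, ... over the first 12 digits)."""
    code = normalise(code)
    if len(code) != 13 or any(c not in "0123456789" for c in code):
        return False
    digits = [int(c) for c in code]
    total = sum(d * (3 if i % 2 else 1) for i, d in enumerate(digits[:12]))
    return (10 - total % 10) % 10 == digits[12]


# In-memory database with one hypothetical catalogue row.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (ean TEXT PRIMARY KEY, sku TEXT, name TEXT)")
conn.execute("INSERT INTO products VALUES (?, ?, ?)", ("4006381333931", "SKU-0001", "Example item"))


def lookup(code: str):
    """Exact-match lookup; misreads that fail the check digit are rejected outright."""
    if not ean13_is_valid(code):
        return None
    cursor = conn.execute("SELECT sku, name FROM products WHERE ean = ?", (normalise(code),))
    return cursor.fetchone()


print(lookup("4006381333931"))  # ('SKU-0001', 'Example item')
print(lookup("4006381333932"))  # None: the check digit does not match
```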

I haven’t read any reviews yet on how accurate Amazon Firefly is, since the phone has only just been released. However, we can get insights into how it might work, since Amazon released a feature called Flow for its iOS app that does basically the same thing. Amazon Flow’s own product page from its A9 search team doesn’t give much insight, and I wasn’t able to find any patents that might have been filed for it. I did come across a computer vision blog, though, that covered similar ground.

Now, from my understanding of the research, object recognition on its own is a particularly challenging problem in computer vision. Object recognition of the kind Amazon Firefly would need works great when the possible inputs are limited to certain types of objects (e.g., identifying fighter planes against buildings), but things become murkier if the possible inputs are anything a user can take a picture of. So barring Amazon making a phenomenal advance in object recognition that could recognise anything, I knew they had to be using some clever method to short-circuit the problem.

The key takeaway from that blog post is that in addition to object recognition, Amazon’s Flow app quite probably uses text recognition as well. Text recognition is a simpler task because the set of possibilities is limited to the English alphabet (in the simplest case; of course, it can be expanded to include other character sets). My conjecture is that Amazon is actually relying on text recognition rather than object recognition: it extracts the text it finds on a product’s packaging, rather than trying to figure out what an item is merely from its shape, colour, et al. News articles on the Flow app seem to suggest this. From Gizmodo:

In my experience, it only works with things in packaging, and it works best with items that have bold, big typography.

Ars Technica tells a similar story, specifically for the Firefly feature:

When scanning objects, Firefly has the easiest time identifying boxes or other packaging with easily distinguishable logos or art. It can occasionally figure out “naked” box-less game cartridges, discs, or memory cards, but it’s usually not great at it.

If this is indeed true, then the app is probably doing text recognition and then searching for that term on the Amazon store. This leaves open the possibility that even though the Flow app / Firefly can figure out the name of an item, it won’t necessarily know the exact stock keeping unit. Yet again, another news article seems to bear this out. From Wired:

And while being able to quickly find the price of gadgets is great, the grocery shopping experience can sometimes be hit-or-miss when it comes to portions. For example, the app finds the items scanned, but it puts the wrong size in the queue. In one instance, it offered a 128-ounce bottle of Tabasco sauce as the first pick when a five-ounce bottle was scanned.

From a computer vision perspective, this is not surprising, since items with the same name but different sizes might have the same shape…and depending on the distance at which a picture is shot, the best guess of a system that combines text and shape search may not find an exact match in a database. It is also a significantly more complex database query, as it needs to compare multiple feature sets that may not be easily stored in a relational database (think NoSQL databases).
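Both halves of that problem can be sketched. If the pipeline really is “recognise the text, then search for it”, label text identifies a product line rather than a stock keeping unit; and the pinhole camera model (real height = pixel height × distance / focal length in pixels) shows why shape alone doesn’t settle the size when the distance is unknown. A minimal sketch with a hypothetical three-item catalogue, a naive token-overlap score standing in for whatever ranking Amazon actually uses, and made-up bottle sizes, distances and focal length.

```python
import re

# Hypothetical catalogue: one product line in three pack sizes.
CATALOGUE = {
    "SKU-A": "Brand X Pepper Sauce, 5 oz",
    "SKU-B": "Brand X Pepper Sauce, 12 oz",
    "SKU-C": "Brand X Pepper Sauce, 128 oz",
}


def tokens(text: str) -> set:
    """Lower-case alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def search(ocr_text: str) -> list:
    """Return the SKUs whose titles share the most tokens with the recognised text."""
    query = tokens(ocr_text)
    scores = {sku: len(query & tokens(title)) for sku, title in CATALOGUE.items()}
    best = max(scores.values())
    return sorted(sku for sku, score in scores.items() if score == best)


def pixel_height(real_height: float, distance: float, focal_px: float) -> float:
    """Pinhole model: apparent size in pixels = real size * focal length / distance."""
    return real_height * focal_px / distance


# The big, bold text on the label is the brand and product name, not the size.
print(search("BRAND X PEPPER SAUCE"))  # ['SKU-A', 'SKU-B', 'SKU-C']: a three-way tie
print(search("BRAND X PEPPER SAUCE 5 OZ"))  # ['SKU-A']: only if the small print is read too

# Hypothetical bottles: 12 cm tall at 30 cm away, and 36 cm tall at 90 cm away.
F = 1000.0  # hypothetical focal length in pixels
print(pixel_height(12.0, 30.0, F), pixel_height(36.0, 90.0, F))  # 400.0 400.0
```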

How does all of the above impact the usage of the Firefly feature?

The specifics of what Amazon Firefly / Flow can and cannot do will have a significant impact on usage. If it can identify objects and items based on shape, in the absence of packaging or a name, then Amazon Firefly will be a game changer. It’s an unprecedented use case that can allow people to buy items they don’t even know the exact name for, and hence it creates an opportunity to buy items (from Amazon, of course) that they otherwise may not have been able to buy.

If, however, Amazon Firefly can merely read bold text on a bottle / packaging, then it will remain a gimmick. Depending on how good the text recognition is, users may find no significant benefit compared to typing out the name of a product which is clearly visible.

…but even if Firefly isn’t amazing yet, Amazon has a plan

One of the fundamental problems in trying to solve computer vision problems (such as object recognition) is that researchers need datasets to test algorithms on. Good datasets are incredibly hard to find. Often, they are created by academic research groups and are therefore restricted, by limited budgets, in the range of pictures they contain.

Even if, right now, Amazon Firefly can only do text recognition, releasing the Flow app and the Firefly feature into the wild allows them to capture a dataset of images of objects at an industrial scale. Presumably, if it identifies an object incorrectly and a user corrects it to the right object manually, this is incredibly valuable data which Amazon can then use to fine-tune their image recognition algorithms.
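That data flywheel can be sketched as a simple logging step: whenever a user overrides the top prediction, the image paired with the product they actually chose becomes a new labelled example, and the image paired with the rejected prediction becomes a “hard negative”. A minimal sketch; the record fields and function name are hypothetical, and nothing here reflects how Amazon actually stores or uses such data.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Feedback:
    image_id: str
    predicted_sku: str
    chosen_sku: Optional[str]  # None if the user abandoned the result


def to_training_examples(log: List[Feedback]) -> List[Tuple[str, str, bool]]:
    """Turn interaction logs into (image_id, sku, is_match) labels."""
    examples = []
    for fb in log:
        if fb.chosen_sku is None:
            continue  # no reliable signal either way
        if fb.chosen_sku == fb.predicted_sku:
            examples.append((fb.image_id, fb.predicted_sku, True))  # confirmed prediction
        else:
            examples.append((fb.image_id, fb.chosen_sku, True))  # the user's correction
            examples.append((fb.image_id, fb.predicted_sku, False))  # hard negative
    return examples


log = [
    Feedback("img-1", "SKU-C", "SKU-A"),  # wrong size offered; the user picked another
    Feedback("img-2", "SKU-B", "SKU-B"),
    Feedback("img-3", "SKU-A", None),
]
for example in to_training_examples(log):
    print(example)
```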

Google has a similar app as well called Google Goggles (Android only) which can identify a subset of images, such as famous pieces of art, landmarks, text, barcodes, etc. At the moment, Google isn’t using this specifically in a shopping context – but you can bet they will be collecting data to make those use cases better.

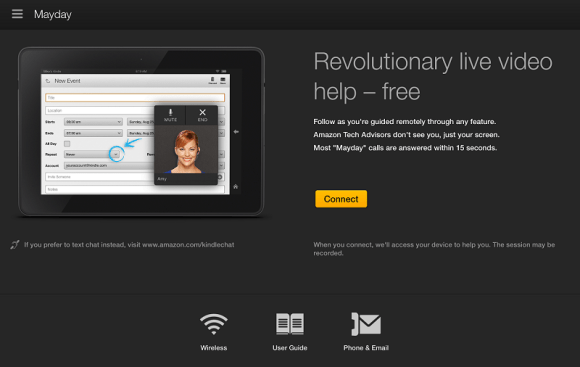

Dark horse feature: instant tech support through Mayday

Among all of the reviews that I’ve read for the Amazon Fire Phone in tech blogs / media, precisely one acknowledged the Mayday tech support feature as a selling point: Farhad Manjoo’s article in the New York Times. Perhaps tech beat writers, who would rather figure things out themselves, didn’t have much need for it and hence skipped over it. Mayday provides real-time video chat 24 / 7 with an Amazon tech support representative, who can control and draw on the user’s screen to demonstrate how to accomplish a task.

Terms such as “the next billion” often get thrown about for the legions of people about to upgrade to smartphones. This could mean people in developing countries, but also people in developed countries looking to upgrade their phones. Instant tech support, without having to go to a physical store, would be a killer app for many inexperienced smartphone users. (Hey, maybe people just want to serenade someone, propose marriage, or order pizza while getting tech support.)

I think that fundamentally, beyond all the gimmicky features like Dynamic Perspective, the Mayday feature is what is truly revolutionary, in terms of how Amazon has been able to scale it to provide a live human to talk to within 10 seconds (not just from a technical perspective, but also in how to run a virtual contact centre). Make no mistake: while Amazon may have been the first to do it at scale, this is where the future of customer interaction lies. The technology behind Mayday could easily be offered as a white-label solution to enterprises (think “AWS for contact centres”), or used in B2C to offer personalised recommendations or app-specific support.

(Also check out this analysis of how Amazon Mayday uses WebRTC infrastructure.)
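Answering every session within seconds is, at heart, a queueing and staffing problem. Contact centres classically size their staff with the Erlang C model, which assumes random (Poisson) arrivals and exponentially distributed handling times. A minimal sketch that finds the smallest number of agents meeting a service-level goal; the arrival rate, session length and goal are hypothetical, not Amazon figures.

```python
import math


def erlang_c(agents: int, traffic: float) -> float:
    """Probability that an arriving session has to wait (Erlang C).

    traffic is the offered load in Erlangs: arrival rate * average handle time.
    Uses the numerically stable Erlang B recursion, then converts B to C.
    """
    if agents <= traffic:
        return 1.0  # the queue grows without bound
    b = 1.0
    for m in range(1, agents + 1):
        b = traffic * b / (m + traffic * b)
    return agents * b / (agents - traffic * (1 - b))


def service_level(agents: int, arrivals_per_s: float, handle_s: float, target_s: float) -> float:
    """Fraction of sessions answered within target_s seconds."""
    traffic = arrivals_per_s * handle_s
    if agents <= traffic:
        return 0.0
    p_wait = erlang_c(agents, traffic)
    return 1 - p_wait * math.exp(-(agents - traffic) * target_s / handle_s)


def agents_needed(arrivals_per_s: float, handle_s: float, target_s: float, goal: float) -> int:
    """Smallest number of agents meeting the service-level goal (goal must be below 1)."""
    agents = math.floor(arrivals_per_s * handle_s) + 1
    while service_level(agents, arrivals_per_s, handle_s, target_s) < goal:
        agents += 1
    return agents


# Hypothetical load: 2 new sessions per second, a 5-minute average session,
# and a goal of answering 90% of sessions within 10 seconds.
print(agents_needed(2.0, 300.0, 10.0, 0.90))
```

The interesting property for a service like Mayday is that large pools are more efficient: the spare capacity needed on top of the raw load grows much more slowly than the load itself.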

What comes next from Amazon?

There aren’t sales figures yet for the Amazon Fire Phone. Don’t hold your breath for them either: Amazon is famous for the opaque “growth” charts at its product unveiling events. It may be able to push sales through the valuable real estate it has on the Amazon.com home page, or it might fall flat. Regardless of what happens, this opacity does afford Amazon the luxury of refining its strategy in private. (Even if it sells 20 units vs 10 units in the previous month, you can damn well be sure they’ll have a growth chart showing “100% increase!!!”)

One thing worth bearing in mind is that Jeff Bezos has demonstrated a proclivity for the long game rather than short-term gains. Speaking to the New York Times, he said:

We have a long history of getting started and being patient. There are a lot of assets you have to bring to bear to be able to offer a phone like this. The huge content ecosystem is one of them. The reputation for customer support is one of them. We have a lot of those pieces in place.

You can see he touches on all the points I mentioned in this article: ecosystem, research, customer support. Amazon’s tenacity is not to be discounted. Even if this first version of the Fire Phone is a flop, they’re sure to iterate and make the technology and feature offerings better in the next version.

Personally, I’m quite excited to see how baby steps are being taken in terms of using computer vision (Fire Phone, Word Lens!), speech recognition (Siri, Cortana), and personal assistants (Google Now). Let the games begin!